Solving the rendering equation with even just one bounce of indirect lighting can take a long time. The majority of time spent rendering a frame is in estimating the lighting integral. For example, rendering a single bounce of indirect lighting at 720p resolution (1280×720 pixels) with 256 sample rays per pixel for a Monte Carlo estimator requires about 236 million rays to be cast. This doesn’t even include the rays needed for sampling the lights for direct lighting, so in practice, the total will be even higher.

One interesting observation made by Greg Ward in his Siggraph ’88 paper is that unlike direct lighting, where shadows and lights can cause harsh changes, the indirect lighting on a surface tends to vary relatively slowly. One way to picture why is to imagine computing the average color of what you can see from each of your eyes. Even though each eye has a slightly different view of the world, the images they see are nearly identical, and so the average color is also nearly the same.

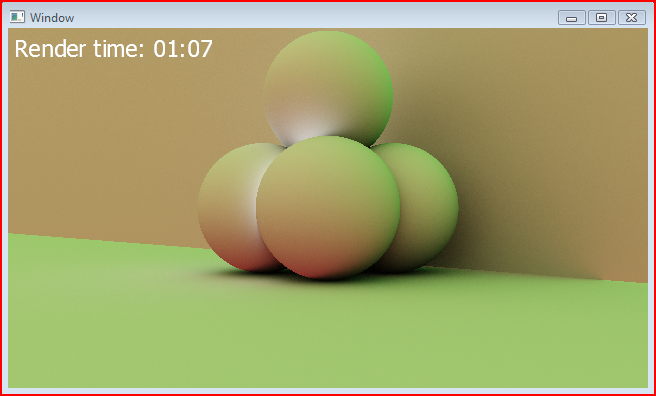

The image below shows the same scene from my previous post with just the indirect irradiance, and it’s pretty clear that for each surface, the lighting varies in a very smooth fashion.

Ward proposed using this knowledge to reduce the number of times that the Monte Carlo estimator was evaluated by interpolating between nearby previously calculated values. At the time he just called it ‘lazy evaluation’, which I personally think is a good way to picture the idea. Later it became known as irradiance caching.

Irradiance Caching

The basic concept for irradiance caching is really simple: For each point on a surface at which you want to evaluate irradiance, if the cache contains any valid entries then interpolate between them. Otherwise, calculate a new irradiance entry, and add it to the cache.
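As a rough sketch of that control flow in Python, the lookup-or-compute logic might look something like this. The names are hypothetical and not taken from my renderer: `interpolate` is assumed to return `None` when no cache entry is valid, and `compute_entry` stands in for the full Monte Carlo estimate.

```python
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def irradiance_at(
    point: Vec3,
    normal: Vec3,
    cache: List[dict],
    interpolate: Callable[[List[dict], Vec3, Vec3], Optional[Vec3]],
    compute_entry: Callable[[Vec3, Vec3], dict],
) -> Vec3:
    """Return the irradiance at a surface point, evaluating lazily."""
    # Cheap path: reuse nearby entries if any of them are valid here.
    estimate = interpolate(cache, point, normal)
    if estimate is not None:
        return estimate

    # Expensive path: run the estimator once and remember the result.
    entry = compute_entry(point, normal)
    cache.append(entry)
    return entry["irradiance"]
```

The expensive estimator only runs on a cache miss, which is where the ‘lazy evaluation’ name comes from.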

A cache entry contains the position and normal for the point on the surface where the irradiance was evaluated as well as the irradiance value itself. One important additional piece of information that the cache requires is the range over which the entry is considered potentially valid. This range could be calculated in a number of ways, but the most common one is to use the harmonic mean of the hit distance of the rays used for the estimator. For n estimator samples with hit distances d_i, the harmonic mean is simply:

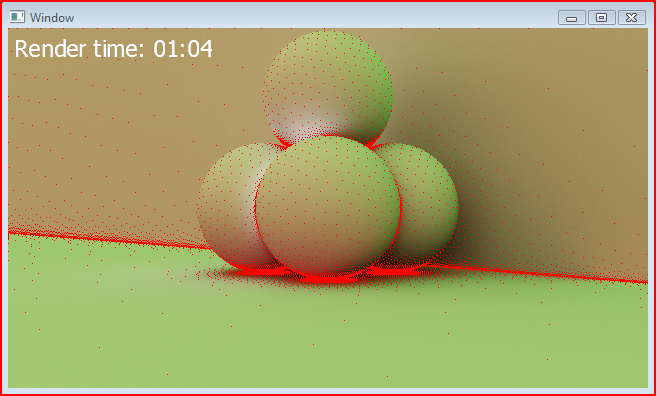
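In code, the harmonic mean is the sample count divided by the sum of the reciprocal hit distances, n / Σ(1/d_i). Here is a minimal Python sketch. Treating a ray that hits nothing as having infinite distance is my assumption for this example, and the function name is made up.

```python
import math
from typing import Iterable


def harmonic_mean_distance(hit_distances: Iterable[float]) -> float:
    """Harmonic mean n / sum(1 / d_i) of the estimator's ray hit distances."""
    distances = list(hit_distances)
    if not distances:
        raise ValueError("need at least one sample ray")
    if any(d <= 0.0 for d in distances):
        raise ValueError("hit distances must be positive")

    # A ray that misses everything (d = inf) adds 1 / inf = 0 to the sum.
    inverse_sum = sum(1.0 / d for d in distances)
    if inverse_sum == 0.0:
        return math.inf  # every ray missed: there is nothing nearby at all
    return len(distances) / inverse_sum
```

The harmonic mean is dominated by the shortest distances. For hit distances of 0.1, 10, 10 and 10, it comes out at roughly 0.39, whereas the arithmetic mean would be about 7.5. A single nearby surface is enough to shrink the range.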

Using the harmonic mean distance makes the cache entry distribution very dense in corners and crevices, and sparse in open spaces. This matches up very well with where the indirect irradiance is likely to be changing the fastest. To get an idea of how the cache entry distribution looks, here’s the scene above with the cache entry positions shown as red dots:

Once you can add entries into the cache, you need to know how to find whether or not a particular cache entry can be used for interpolating the irradiance at a sample point. There are potentially quite a few ways that you can discard invalid cache entries depending on how fancy you want to get. For now, I’m using three simple tests.

Discard the entry if any of the following are true:

- It is out of range of the sample point.

- It has a normal that is too different from the sample normal.

- It is in front of the sample point.
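The three tests could be sketched in Python along these lines. The post doesn't pin down exact formulas, so treat this as one plausible reading: the `CacheEntry` layout, the 10 degree angle limit and the `epsilon` tolerance are all assumptions, and the "in front" test follows the averaged-normal plane test from Ward's paper.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class CacheEntry:
    position: Vec3
    normal: Vec3      # assumed unit length
    irradiance: Vec3
    radius: float     # harmonic mean hit distance


def is_usable(
    entry: CacheEntry,
    point: Vec3,
    normal: Vec3,
    max_angle_degrees: float = 10.0,  # assumed limit, tune to taste
    epsilon: float = 1e-4,            # assumed tolerance for the plane test
) -> bool:
    """Return False if any of the three discard tests fires."""
    offset = (
        point[0] - entry.position[0],
        point[1] - entry.position[1],
        point[2] - entry.position[2],
    )

    # Test 1: the entry is out of range of the sample point.
    if math.sqrt(dot(offset, offset)) > entry.radius:
        return False

    # Test 2: the entry's normal is too different from the sample normal.
    if dot(normal, entry.normal) < math.cos(math.radians(max_angle_degrees)):
        return False

    # Test 3: the entry is in front of the sample point, i.e. the sample lies
    # behind the plane through the entry, measured along the averaged normal.
    average_normal = (
        0.5 * (normal[0] + entry.normal[0]),
        0.5 * (normal[1] + entry.normal[1]),
        0.5 * (normal[2] + entry.normal[2]),
    )
    if dot(offset, average_normal) < -epsilon:
        return False

    return True
```

The tests are ordered from cheapest to most involved, although with a plain array scan the difference is unlikely to matter much.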

Once you have a valid cache entry, you need to calculate a weight for that entry, then carry on looking for other entries that are potentially valid. As you come across each valid cache entry, you need to keep the sum of the weighted irradiance values, and the sum of the weights themselves. From these two sums, you can calculate the final interpolated irradiance, where E_i is the irradiance stored in entry i and w_i is its weight at the sample point p:

$$E(p) = \frac{\sum_i w_i(p)\, E_i}{\sum_i w_i(p)}$$
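Here is a small Python sketch of the two running sums. It assumes the valid entries have already been reduced to `(weight, irradiance)` pairs, and it returns `None` to signal a cache miss. Both conventions are mine, for illustration only.

```python
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def interpolate_irradiance(
    weighted_entries: Iterable[Tuple[float, Vec3]],
) -> Optional[Vec3]:
    """Blend (weight, irradiance) pairs; None means there was nothing to blend."""
    weighted_sum = [0.0, 0.0, 0.0]  # sum of weight * irradiance, per channel
    weight_sum = 0.0                # sum of the weights themselves

    for weight, irradiance in weighted_entries:
        for channel in range(3):
            weighted_sum[channel] += weight * irradiance[channel]
        weight_sum += weight

    if weight_sum <= 0.0:
        return None  # no valid entries: the caller has to compute a new one
    return (
        weighted_sum[0] / weight_sum,
        weighted_sum[1] / weight_sum,
        weighted_sum[2] / weight_sum,
    )
```

Because the result is normalized by the sum of the weights, only the relative sizes of the weights matter.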

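The weight for a particular cache entry is another part of the algorithm that can potentially be calculated in many different ways. For now, I’m using the weight that Ward proposes, but there’s some interesting information about the weights used at Dreamworks in this paper. Here’s Ward’s initial weighting function:

$$w_i(p) = \frac{1}{\dfrac{\lVert p - p_i \rVert}{R_i} + \sqrt{1 - n \cdot n_i}}$$

Here p and n are the position and normal of the sample point, and p_i, n_i and R_i are the position, normal and harmonic mean distance stored in cache entry i.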

Note that you have to be a little bit wary of this function, since it is unbounded. When the sample point lies at exactly the same position as the cache entry and has the same normal, you will get a divide by zero.
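Ward's weight is the reciprocal of two error terms added together: the distance from the entry to the sample point divided by the entry's harmonic mean distance, plus sqrt(1 - n·n_i), which grows as the two normals diverge. A guarded Python version might look like this. The `max_weight` clamp is my own assumption for dodging the divide by zero, not something from Ward's paper.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ward_weight(
    point: Vec3,
    normal: Vec3,
    entry_position: Vec3,
    entry_normal: Vec3,
    entry_radius: float,
    max_weight: float = 1.0e6,  # assumed clamp for the unbounded case
) -> float:
    """Ward's weight: 1 / (distance / radius + sqrt(1 - n . n_i))."""
    distance = math.dist(point, entry_position)
    cos_angle = sum(a * b for a, b in zip(normal, entry_normal))

    # Clamp at zero so rounding error can't push the sqrt argument negative.
    rotation_term = math.sqrt(max(0.0, 1.0 - cos_angle))

    denominator = distance / entry_radius + rotation_term
    if denominator <= 1.0 / max_weight:
        return max_weight  # sample is (almost) exactly on top of the entry
    return 1.0 / denominator
```

With matching normals, a sample exactly one harmonic mean distance away from the entry gets a weight of 1, and the weight grows as the sample moves closer.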

Typically, you would also discard cache entries that are below some weight threshold as specified by the user. This effectively scales the density of the cache entries and allows the user to make the trade off between speed and quality.
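The threshold test itself is tiny. In this Python sketch, `min_weight` is a hypothetical name for the user-specified threshold. In Ward's formulation it corresponds to 1/a, where a is the user's error tolerance.

```python
from typing import Iterable, List, Tuple, TypeVar

Entry = TypeVar("Entry")


def filter_by_weight(
    weighted_entries: Iterable[Tuple[float, Entry]],
    min_weight: float,
) -> List[Tuple[float, Entry]]:
    """Keep only the (weight, entry) pairs that reach the threshold."""
    return [(w, e) for w, e in weighted_entries if w >= min_weight]
```

To see why this scales the cache density, take Ward's weight with matching normals: the weight is then R_i divided by the distance to the entry, so requiring a weight of at least `min_weight` is the same as requiring the sample to be within R_i / `min_weight` of the entry. Doubling the threshold halves every entry's usable radius, which forces more entries to be created.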

Implementation

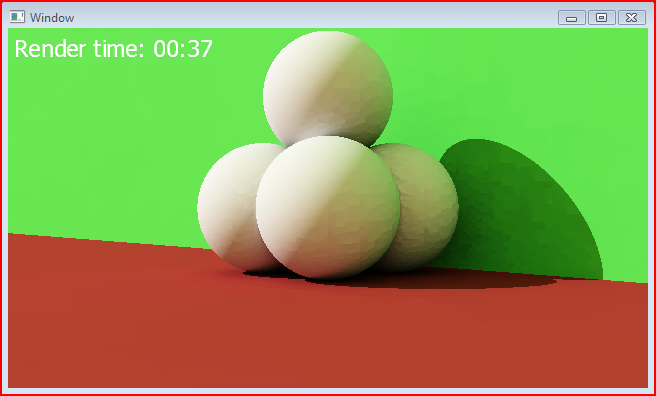

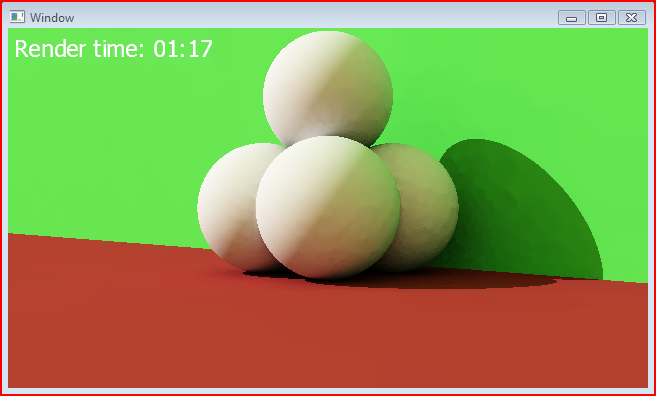

I’ve made a very bare bones implementation of irradiance caching as outlined above. At the moment I’m not using a quad tree to store the cache entries, so each cache check requires iterating through an array of entries. Clearly this is a very slow way to process the cache entries, but for now it does a decent enough job to allow me to focus on the irradiance caching algorithm itself. Here are the results:

Not very impressive, or smooth, is it? I was hoping that the simple implementation I have made would provide better results than this, but apparently not. At the moment there’s one crucial improvement to the algorithm that my implementation is missing though – Irradiance Gradients. Irradiance Gradients basically give a better clue as to how to interpolate the irradiance cache entries, both positionally and rotationally. I’m hoping that they will significantly reduce the artefacts visible at the moment.

One problem that can occur when using an irradiance cache is that later cache entries don’t contribute to previously rendered pixels. When this happens, you can see blocky artefacts where the irradiance values have been interpolated differently. Something like this:

One thing you can do to avoid this situation is to perform an irradiance gathering pass before doing the final render. When you perform the final render, you should have no cache misses. In my case, I am using a progressive renderer, so the cache is actually fairly well primed before rendering the 1×1 pixel size.
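A gathering pass can be as simple as shading every pixel once and throwing the results away. In this Python sketch, `shade` is a hypothetical callback that evaluates a pixel and, as a side effect, adds cache entries on a miss. It illustrates the two-pass idea rather than how my progressive renderer is structured.

```python
from typing import Callable, Dict, Hashable, Sequence, TypeVar

Color = TypeVar("Color")


def render_with_gather_pass(
    pixels: Sequence[Hashable],
    shade: Callable[[Hashable], Color],
) -> Dict[Hashable, Color]:
    """Prime the cache with a throwaway pass, then render for real."""
    # Pass 1: visit every pixel only to populate the cache; ignore the colors.
    for pixel in pixels:
        shade(pixel)

    # Pass 2: the cache is now populated, so every pixel interpolates against
    # the same set of entries regardless of the order it is rendered in.
    return {pixel: shade(pixel) for pixel in pixels}
```

The obvious cost is that every pixel is visited twice, although the second visit should be cheap because it only interpolates.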

Improvements

In addition to irradiance gradients, there have been a load of improvements made to irradiance caching since the initial paper. The course notes for the Siggraph 2008 course provide details of many of these. I’ll post up some screenshots when I’ve added the irradiance gradients.

I was just about to write an irradiance cache and found your blog post on it. Good stuff!

I’m a student of Computer Graphics and I love your blog. Thanks for writing.

Thanks for the excellent posts on Irradiance Caching. Nice to have another programmer’s perspective while concurrently reading through Krivanek and Gautron’s Practical Global Illumination with Irradiance Caching.


Hello,

I have implemented Irradiance caching already with good results. I am trying to understand Irradiance GRADIENTS to add them to my application… Can u help? So we have translation and rotation gradients. Take the rotation gradient, is there a gradient for each color component of the irradiance estimate? When I sample final gathering rays, they return each with an RGB value, which is the color of the radiance found by each vector. How do u compute the Gradient? Of course there are formulas, but the literature is vague about how u apply them to the RGB colors of each irradiance. Again, is there a Gradient for each R, G, B component, or do u compute one gradient vector and apply it to the colors for each irradiance? Any help would be appreciated… Camil

I wrote about rotational gradients in the next post: http://www.rorydriscoll.com/2009/01/24/irradiance-caching-part-2/

I never wrote up my translational gradients post, but as Richard mentions above, Practical Global Illumination with Irradiance Caching is a good read if you’re interested in this.

http://www.amazon.com/Practical-Illumination-Irradiance-Synthesis-Animation/dp/1598296442/ref=sr_1_1?ie=UTF8&qid=1372297709&sr=8-1&keywords=Practical+Global+Illumination+with+Irradiance+Caching